The Birth of Application Server Boilerplate

One of the most exciting tasks for our team in the last month was to create a new application server “boilerplate” that would be used as a basis for most of our growing data-processing products, as well as for other people and companies who are interested in implementing an asynchronous server in Python to their products. And, as you will learn, the result lies beyond our original expectations.

First, it is important to know that we were facing difficulties in deciding what to do with the backend of the SeaCat Admin Panel and the Black Swan Pump (aka BSPump), which started to go in separate directions, each with its own application background. Both of them are successful products focused on asynchronous and secure data processing, which need to be carefully maintained in order to stay up-to-date and allow us to promptly respond to our clients when an anomaly or other incident occurs.

After a series of discussions, the decision was finally made by our leader, Ales, who suggested we do a common one-day workshop to create an application server, which is then going to be used by most of our products and utilize most of today’s asynchronous programming capabilities. After a brainstorming session, the meeting for the workshop was planned and appointed.

Picture: ASAB Architecture

When the workshop started at our TeskaLabs Prague office in the morning (around 10 AM), we (the four developers on the Green Team) were all working together to create the basic application object, which would then hold other modules and services that every right application boilerplate needs. Personally, I then decided to create a publish-subscribe messaging object that would notify registered listeners from other components when a specified event occurred, i.e., if the application was initialized, the output data queue is full, etc.

In the meantime, other developers from our Green Team were assigned tasks to create the remaining basic components. Mila took care of the logging object, which is particularly necessary for our ITGuard product. Honza was focusing on the configuration object, which would keep settings of all the application’s components. When we were coding the application, Ales explained to us how the asynchronous part of the application is going to be implemented. We were having quite a fun time trying to manage all the asynchronous coroutines that are crucial for future implementations of real-time stream processors.

From day one, we decided to implement this piece on the GitHub as an open-source project so you can see our progress in Git history :-) Personally, I enjoyed the coding and testing of the publish-subscribe class together with all the coroutines, awaits, and other Python asyncio stuff. Our job was proceeding surprisingly smoothly, especially since we were helping one another.

After a few days, using our freshly baked Asynchronous Server Application Boilerplate (ASAB), we started implementing the Black Swan Pump—the big data real-time stream processor, which is open-source and public as well. Not only was expanding the ASAB to match the BSPump needs successful, it was also fast and organized to the extent that an outsider programmer might immediately learn what all the newly created modules and services actually do.

When a new version of Python or its libraries is available, it is just a matter of seconds to modify necessary dependencies in the ASAB section and all of our products will be instantly updated. The asynchronous part is also fast and can be instantiated on multiple CPU cores or even computers. That’s the advantage of modern asynchronous programming, which dwells in the fact that there is no need to spend time and computing resources on handling race conditions, deadlocks, and other pesky confusing stuff that goes with parallel thread programming. I was surprised how easy it had been to create such a flexible and modern boilerplate that can be used by lots of successive products.

You now probably excited to learn more about what we have been doing with the ASAB server, so next time I will focus on the previously mentioned BSPump project, how it came to be, along with related stream data analysis, transformations, and data mining.

If you are more interested in the ASAB boilerplate and its features, please see our code at GitHub https://github.com/TeskaLabs/asab, use it in your own software or, even better: contribute to it. You can get in touch with us at info@teskalabs.com or on Gitter chat. We will be happy to hear your thoughts and feedback!

Continue to next article

Most Recent Articles

You Might Be Interested in Reading These Articles

Don't worry, ASAB and the universe can be fixed

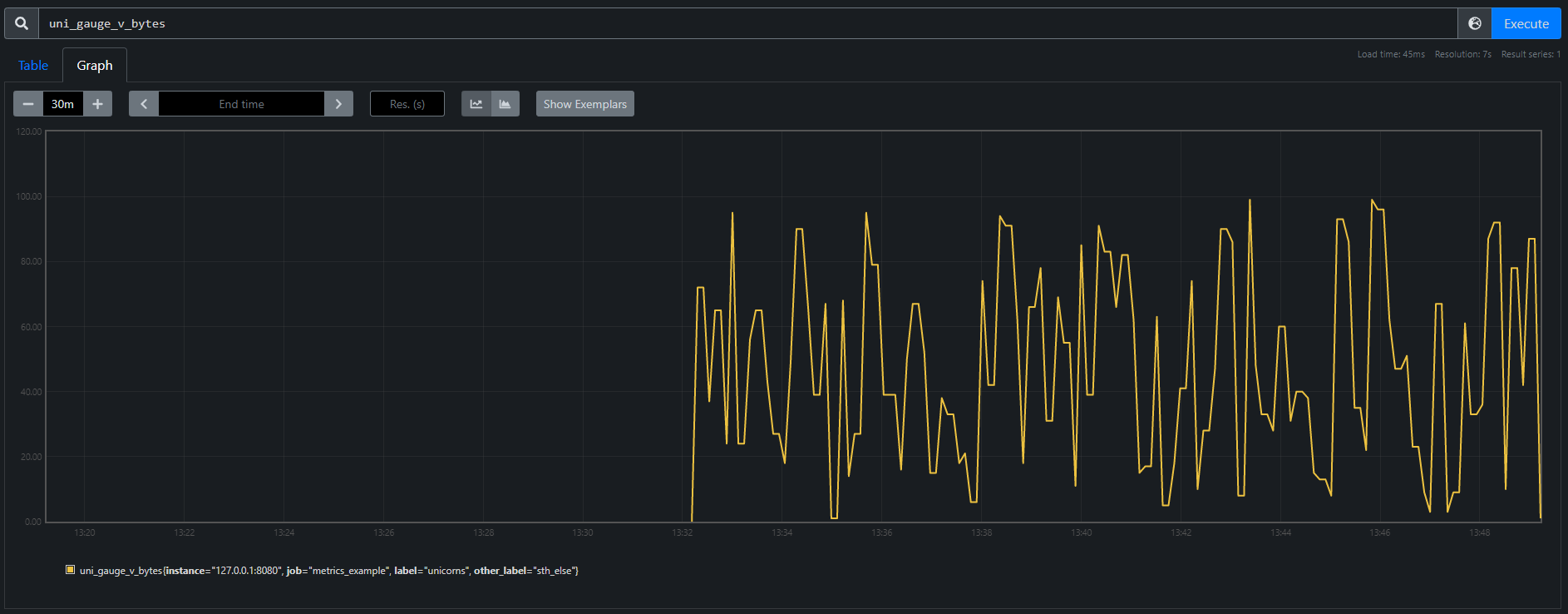

How do my first sprints in TeskaLabs look like? Sheer desperation quickly turns into feelings of illumination and euphoria. And back. I've also made a few new friends and learned a thing or two about flushing metrics. The digital adventure begins.

Published on February 15, 2022

Why Developers Are Boosting Up Their Mobile Application Security?

Mobile application security is a significant issue for developers. Most try their best to make mobile apps secure and safe for their users. Here are some of the other reasons why developers are boosting up their mobile application security.

Published on April 14, 2015

SeaCat tutorial - Chapter 3: Introduction to REST Integration (iOS)

The goal of this article is to extend the knowledge and develop an iOS application which is able to comunicate with REST interface provided by Node.js that we are going to create as well. A full integration with SeaCat is essential for information security of our example.

Published on October 07, 2014