Building the Correlator #1: Analyzing long series of cybersecurity data with window correlation

In a cybersecurity system, there are usually tens of thousands of messages transmitted every second, each containing information about user behavior, device status, connection attempts, and other activities within a network infrastructure. These messages are called logs, and they are produced not only by the servers themselves but also by all kinds of applications running on those servers, as well as by network devices such as firewalls.

Before any information can be extracted from these messages, the logs need to be parsed into a unified schema, so that the same attribute coming from different types of applications is stored under the same field name.

For example, any username from any log source should be stored in the field user.name.
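As a minimal illustration of this normalization step, the following C sketch maps source-specific field names onto the unified name. The source field names (`username`, `src_user`, `login`) and the function `unify_field_name` are hypothetical. A real parser would be driven by per-source parsing rules rather than by a single lookup table.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical mapping from source-specific field names to the unified schema.
   The source names below are illustrative assumptions, not real parser rules. */
struct field_mapping {
    const char *source_field;  /* field name as it appears in one log source */
    const char *unified_field; /* field name in the unified schema */
};

static const struct field_mapping MAPPINGS[] = {
    { "username", "user.name" },  /* e.g. an application log */
    { "src_user", "user.name" },  /* e.g. a firewall log */
    { "login",    "user.name" },  /* e.g. an authentication service */
};

/* Returns the unified field name, or NULL if the source field is unknown. */
const char *unify_field_name(const char *source_field)
{
    assert(source_field != NULL);
    for (size_t i = 0; i < sizeof MAPPINGS / sizeof MAPPINGS[0]; i++) {
        if (strcmp(MAPPINGS[i].source_field, source_field) == 0) {
            return MAPPINGS[i].unified_field;
        }
    }
    return NULL;
}
```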

Only when parsing is done properly can we analyze messages or events to detect security incidents.

Most detection and correlation rules focus on detecting events with attributes that require higher caution. Consider failed login attempts. If a log message contains information about a failed login attempt for a given user, the detection is triggered and an alert, in the form of an email, is sent to the system administrators. However, such a simple detection would trigger many unimportant alerts, because the user may simply have mistyped their password on the first try. Higher caution is warranted if, for instance, a user entered an incorrect password ten times during the last five minutes. Here, alerting the system administrator makes more sense, because this behavior is more likely to indicate that someone is trying to break into a user account they have no access to. The events therefore need to be analyzed in a time window that specifies how many such events must occur, and within what time period, to trigger an alert.
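A minimal sketch of such a rule for a single user, using the thresholds from the example above (ten failures within five minutes). It assumes, as a simplification, that events arrive in time order, and the names `struct failed_login_window` and `record_failed_login` are hypothetical. Only the timestamps of the last ten failures are kept in a ring buffer, and an alert is raised when the oldest of them is less than five minutes old.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <time.h>

#define THRESHOLD 10        /* failed logins needed to raise an alert */
#define WINDOW_SECONDS 300  /* five-minute window */

/* Timestamps of the most recent failed logins for one user, kept in a ring
   buffer of THRESHOLD entries; older entries are overwritten. */
struct failed_login_window {
    time_t timestamps[THRESHOLD];
    size_t count;  /* number of valid entries, at most THRESHOLD */
    size_t next;   /* index where the next timestamp will be written */
};

/* Records a failed login at time `t` (events assumed to arrive in time order)
   and returns true if the last THRESHOLD failures all fall within the
   WINDOW_SECONDS seconds ending at `t`. */
bool record_failed_login(struct failed_login_window *w, time_t t)
{
    assert(w != NULL);
    w->timestamps[w->next] = t;
    w->next = (w->next + 1) % THRESHOLD;
    if (w->count < THRESHOLD) {
        w->count++;
    }
    if (w->count < THRESHOLD) {
        return false;  /* not enough failures recorded yet */
    }
    /* With a full buffer, `next` points at the oldest of the last THRESHOLD events. */
    time_t oldest = w->timestamps[w->next];
    return t - oldest < WINDOW_SECONDS;
}
```

The in-order assumption is what keeps this sketch so small; late or early events would break it.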

How can a time window be implemented?

Well, a time window should be able to move back and forth across the data series to detect all possibilities, even when a late or early event arrives. To do so, we need to keep the data series in system memory (RAM), which can be very demanding on the computer's resources. Remember, we are talking about tens of thousands of events per second. True, not all events are stored in the data series, since we filter only the events that require higher caution, but there may nonetheless be tens or hundreds of detection rules inside the same process, each with its own processed series. I took two steps to solve the memory issue, and I want to describe them to you now.
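To make the memory cost concrete, here is a hypothetical dense layout, which is not the design described in this article: one counter per second over a fixed span, for each rule and each dimension. Late or early events are placed by their own timestamp, so the window can be evaluated anywhere within the span, but the memory is spent whether or not any events occur. `SERIES_SECONDS`, `dense_add`, and `dense_count` are illustrative names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <time.h>

#define SERIES_SECONDS 3600  /* hypothetical: one hour of per-second counters */

/* A dense time series: one event counter per second, starting at `start`.
   It costs SERIES_SECONDS * sizeof(uint32_t) bytes per rule and dimension,
   whether or not any events occurred. */
struct dense_series {
    time_t start;
    uint32_t counts[SERIES_SECONDS];
};

/* Adds an event by its own timestamp, so late or early events land in the
   correct second regardless of arrival order. Returns false if out of range. */
bool dense_add(struct dense_series *s, time_t t)
{
    assert(s != NULL);
    if (t < s->start || t >= s->start + SERIES_SECONDS) {
        return false;
    }
    s->counts[t - s->start]++;
    return true;
}

/* Counts events in the window [from, to), clipped to the stored span.
   The window can be placed anywhere inside the span. */
uint32_t dense_count(const struct dense_series *s, time_t from, time_t to)
{
    assert(s != NULL);
    if (from < s->start) {
        from = s->start;
    }
    if (to > s->start + SERIES_SECONDS) {
        to = s->start + SERIES_SECONDS;
    }
    uint32_t total = 0;
    for (time_t t = from; t < to; t++) {
        total += s->counts[t - s->start];
    }
    return total;
}
```

With a one-hour span, this already takes 3,600 counters of 4 bytes each per row before a single event arrives, and that cost is multiplied by every rule and every user.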

First, I needed to create an algorithm that stores only those parts of the timed data series where there are actual events to analyze, because, as in almost every real-time data series, there are time gaps. The type of events a detection rule filters for does not occur every second; significant amounts of time can pass between them. Storing such gaps would consume much more memory than storing only the parts of the series where events are actually located. Ideally, when there are no events, no data is stored in memory at all, just the information that the data series has been empty since the window correlator application started. When a new event arrives, a new segment is created that represents a part of the data series, with its start time just before the time of the event and its end time determined by the segment's length.
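A minimal sketch of on-demand segments, under assumptions of my own: a fixed, hypothetical segment length of 60 seconds, segments aligned to multiples of that length (so a segment starts at or just before its first event), and per-second counters inside each segment. An empty row allocates nothing; a segment appears only when the first event for its time span arrives, and a late event simply creates or updates an earlier segment. `row_add_event` and `row_free` are illustrative names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>
#include <time.h>

#define SEGMENT_SECONDS 60  /* hypothetical segment length in seconds */

/* One segment: per-second event counts for [start, start + SEGMENT_SECONDS). */
struct segment {
    time_t start;
    uint32_t counts[SEGMENT_SECONDS];
};

/* A row: the series for one rule and one dimension value (e.g. one user.name).
   Segments are kept sorted by start time; gaps between them cost no memory. */
struct row {
    struct segment *segments;  /* NULL while the row is empty */
    size_t n_segments;
};

/* Records an event at time `t` (seconds, t >= 0). A segment is created only
   if no existing segment covers `t`. Returns false if allocation fails. */
bool row_add_event(struct row *r, time_t t)
{
    assert(r != NULL && t >= 0);
    /* Align down so the segment starts at or just before the event. */
    time_t seg_start = t - (t % SEGMENT_SECONDS);

    /* Find the first segment that does not start before seg_start. */
    size_t i = 0;
    while (i < r->n_segments && r->segments[i].start < seg_start) {
        i++;
    }

    if (i == r->n_segments || r->segments[i].start != seg_start) {
        /* No segment covers t yet: grow the row by one segment. */
        struct segment *grown =
            realloc(r->segments, (r->n_segments + 1) * sizeof *grown);
        if (grown == NULL) {
            return false;
        }
        r->segments = grown;
        /* Shift later segments right to keep the row sorted (late events). */
        if (i < r->n_segments) {
            memmove(&r->segments[i + 1], &r->segments[i],
                    (r->n_segments - i) * sizeof *grown);
        }
        memset(&r->segments[i], 0, sizeof *grown);
        r->segments[i].start = seg_start;
        r->n_segments++;
    }
    r->segments[i].counts[t - seg_start]++;
    return true;
}

/* Releases all segments of the row. */
void row_free(struct row *r)
{
    free(r->segments);
    r->segments = NULL;
    r->n_segments = 0;
}
```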

The data series is represented by a row object that is specific to the given correlation rule and to the dimension for which the rule performs the detection, such as the user name. The row is composed of segments that remember the events, or the information extracted from them, for a given time span. That is the memory perspective. From the time perspective, there are gaps between the end time of the first segment and the start time of the second segment, and so on. When an analysis is performed, the time window calculates the length of each gap, so that it knows, for instance, that there was one minute with no activity from the perspective of the given correlation rule and dimension. Thus, when the rule evaluates user activity in the last five minutes, it calculates metrics such as the event count only from the four minutes that actually contain data.
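The window evaluation can be sketched as follows, assuming hypothetical 60-second segments with per-second counts and a row whose segments are sorted by start time. The function `evaluate_window` (an illustrative name) reads only the segments that overlap the window and reports how much of the window was covered by stored data and how much was a gap.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

#define SEGMENT_SECONDS 60  /* hypothetical segment length in seconds */

struct segment {
    time_t start;                      /* covers [start, start + SEGMENT_SECONDS) */
    uint32_t counts[SEGMENT_SECONDS];  /* per-second event counts */
};

struct window_result {
    uint64_t event_count;    /* events inside the window */
    time_t covered_seconds;  /* seconds of the window backed by stored segments */
    time_t gap_seconds;      /* seconds of the window with no stored data */
};

/* Evaluates the window [from, to) over `n` segments sorted by start time.
   Only overlapping segments are read; the rest of the window counts as a gap. */
struct window_result evaluate_window(const struct segment *segs, size_t n,
                                     time_t from, time_t to)
{
    assert(from <= to);
    struct window_result res = { 0, 0, 0 };
    for (size_t i = 0; i < n; i++) {
        time_t seg_end = segs[i].start + SEGMENT_SECONDS;
        if (seg_end <= from) {
            continue;  /* segment ends before the window starts */
        }
        if (segs[i].start >= to) {
            break;     /* sorted: no later segment can overlap */
        }
        time_t lo = segs[i].start > from ? segs[i].start : from;
        time_t hi = seg_end < to ? seg_end : to;
        res.covered_seconds += hi - lo;
        for (time_t t = lo; t < hi; t++) {
            res.event_count += segs[i].counts[t - segs[i].start];
        }
    }
    res.gap_seconds = (to - from) - res.covered_seconds;
    return res;
}
```

For a five-minute window in which one minute has no segment, this reports a 60-second gap, and the event count comes only from the remaining four minutes, as in the example above.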

Addressing the question of storage

All right, so this solution saves memory and some processing time when iterating over the data, but it is not enough if you want to store and analyze data from weeks or months ago. That is one of the reasons we decided to store the data series in files that are partially mapped into the process memory. The second reason is persistence: if the data series is stored solely in the process's memory, all the data is lost every time the window correlator process restarts. There are multiple approaches to storing the data in files, requiring more or less algorithmic knowledge, but after some discussion, and because of the nature of the problem (many random memory accesses every second), we decided to use the native low-level MMAP functionality.

On Unix systems, we can use MMAP, or memory mapping, directly in C. It works similarly to malloc, the function used to dynamically allocate memory on the heap of a process, but in addition we specify the preallocated file in which we want to store, or mirror, the data. The data is stored in a manner similar to swap: the kernel loads pages of the file into memory on demand, so the most frequently requested pages tend to stay in physical memory. Like malloc, MMAP returns a pointer to memory; in our case, its size matches that of the mapped file. You can map a region smaller than the file, but if the rest of the file is needed, it has to be mapped separately, which introduces unnecessary overhead. Look at the following code snippet:

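```c
#include <sys/mman.h>

/* Map the whole preallocated data file into the process's address space. */
void *memory_content = mmap(
    NULL,                    /* let the kernel choose the address */
    file_size,               /* length of the mapping: the whole file */
    PROT_READ | PROT_WRITE,  /* the segments are both read and written */
    MAP_SHARED,              /* changes are carried through to the file */
    file_descriptor,         /* descriptor of the opened data file */
    0                        /* map from the beginning of the file */
);
if (memory_content == MAP_FAILED) {
    /* handle the error, e.g. report errno and exit */
}
```

The MAP_SHARED flag means that changes made through the mapped memory are carried through to the file and are visible to every other process that maps the same file.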

The mapped region is file_size bytes long and can be used directly through the memory_content pointer to store and load the actual segments.
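To put the snippet into context, the following hypothetical helper `map_series_file` opens (or creates) the data file, sets its length, and maps all of it. It is a sketch for POSIX systems, not the Correlator's actual code. Note that `ftruncate` produces a sparse file; `posix_fallocate` could be used instead if disk blocks should be reserved up front.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: opens (or creates) the data file at `path`, sets its
   length to `size` bytes, and maps all of it with MAP_SHARED.
   Returns MAP_FAILED on error. */
void *map_series_file(const char *path, size_t size)
{
    assert(path != NULL && size > 0);
    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd == -1) {
        return MAP_FAILED;
    }
    /* Set the file length; a new file reads as zero bytes (sparse).
       An existing file of a different size is extended or truncated. */
    if (ftruncate(fd, (off_t)size) == -1) {
        close(fd);
        return MAP_FAILED;
    }
    void *memory_content = mmap(NULL, size, PROT_READ | PROT_WRITE,
                                MAP_SHARED, fd, 0);
    /* The mapping stays valid after the descriptor is closed. */
    close(fd);
    return memory_content;
}
```

Closing the descriptor right after mapping is safe, because the mapping keeps its own reference to the file until munmap is called.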

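When the application restarts and maps the same file again, MMAP provides a pointer to memory that already contains the data written during the previous run. The pointer itself may differ from the previous run, because the kernel is free to place the mapping at a different address.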

This way, we are not losing any information already obtained through evaluation and analysis of the incoming events.
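The restart behavior can be demonstrated within a single process: the hypothetical `run_once` function below maps the file, updates a small header, and unmaps it, so calling it twice behaves like two consecutive runs of the application. The header layout and the `SERIES_MAGIC` marker are assumptions made for illustration.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical header stored at offset 0 of the data file. */
struct series_header {
    uint64_t magic;        /* marks a file that has already been initialized */
    uint64_t event_count;  /* example of state that must survive a restart */
};

#define SERIES_MAGIC UINT64_C(0x5345524945530001)  /* arbitrary marker value */

/* Simulates one run of the application: maps the file, initializes it on
   first use, adds `new_events` to the stored count, and unmaps it.
   Returns the stored count after this run, or UINT64_MAX on error. */
uint64_t run_once(const char *path, uint64_t new_events)
{
    assert(path != NULL);
    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd == -1) {
        return UINT64_MAX;
    }
    /* No-op if the file already has this size; a new file reads as zeros. */
    if (ftruncate(fd, (off_t)sizeof(struct series_header)) == -1) {
        close(fd);
        return UINT64_MAX;
    }
    struct series_header *h = mmap(NULL, sizeof *h, PROT_READ | PROT_WRITE,
                                   MAP_SHARED, fd, 0);
    close(fd);
    if (h == MAP_FAILED) {
        return UINT64_MAX;
    }
    if (h->magic != SERIES_MAGIC) {  /* first run: the file is all zeros */
        h->magic = SERIES_MAGIC;
        h->event_count = 0;
    }
    h->event_count += new_events;
    uint64_t result = h->event_count;
    /* Not needed for the next mapping to see the data (MAP_SHARED changes go
       to the file's page cache); msync only forces the pages out to disk. */
    msync(h, sizeof *h, MS_SYNC);
    munmap(h, sizeof *h);
    return result;
}
```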

That is how we solved the main issue of storing long-term data for the time window at a large scale. There is, however, one more problem. If a pointer is stored in the mapped memory, it becomes invalid after the window correlator application restarts, because the file may be mapped at a different address. That is why all structures stored in the mapped memory should refer to each other using offsets within the mapped memory/file rather than pointers. I will explain this in more detail in my next article, where I describe the architecture of the time window.
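Until then, here is a minimal sketch of the idea, assuming a hypothetical segment layout in which each stored segment holds the offset of the next one in its row. Offset 0 is reserved as the equivalent of NULL, and the illustrative helper `at_offset` turns an offset into a pointer that is valid only for the current mapping.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NO_OFFSET 0  /* offset 0 is reserved and plays the role of NULL */

/* Hypothetical layout of a segment inside the mapped file.
   `next` is a byte offset from the start of the mapping, not a pointer,
   so it stays valid when the file is mapped at another address. */
struct stored_segment {
    int64_t start;         /* segment start time */
    uint32_t event_count;  /* events recorded in the segment */
    uint64_t next;         /* offset of the next segment in the row, or NO_OFFSET */
};

/* Translates an offset into a pointer valid for the current mapping only. */
static struct stored_segment *at_offset(void *base, size_t mapping_size,
                                        uint64_t offset)
{
    assert(offset != NO_OFFSET);
    assert(offset % _Alignof(struct stored_segment) == 0);
    assert(offset + sizeof(struct stored_segment) <= mapping_size);
    return (struct stored_segment *)((unsigned char *)base + offset);
}

/* Sums the event counts of a row whose first segment is at offset `head`. */
uint64_t sum_row(void *base, size_t mapping_size, uint64_t head)
{
    uint64_t total = 0;
    for (uint64_t off = head; off != NO_OFFSET;) {
        const struct stored_segment *s = at_offset(base, mapping_size, off);
        total += s->event_count;
        off = s->next;
    }
    return total;
}
```

Because only offsets are stored, the same bytes remain usable after a restart even if MMAP returns a different base address.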

Want to know more about the Correlator?

If you're looking for a way to explain event correlation to a beginner, check out our introduction to the Correlator.

You Might Be Interested in Reading These Articles

The Top 5 Mobile Application Security Issues You Need to Address When Developing Mobile Applications

Most recently, a lot of established companies like Snapchat, Starbucks, Target, Home Depot, etc. have been through a PR disaster. Do you know why? Simply because some attackers out there found flaws in their mobile apps and could exploit them. In fact, by the end of this year, 75% of mobile apps will fail basic security tests.

Published on November 03, 2015

The Most Prevalent Wordpress Security Myths

WordPress web development may not seem like a challenging task. Since this platform has been in existence, a wide range of Web developers have handled projects of this nature. However, the top web development companies are not always aware of the issues and problems that take place when it comes to security.

Published on April 15, 2019

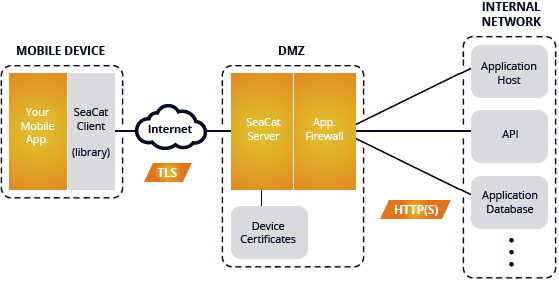

Software architect's point of view: Why use SeaCat

I've recently received an interesting question from one software architect: Why should he consider embedding SeaCat in his intended mobile application? This turned into a detailed discussion and I realised that not every benefit of SeaCat technology is apparent at first glance. Let me discuss the most common challenges of a software developer in the area of secure mobile communication and the way SeaCat helps to resolve them. The initial impulse for building SeaCat was actually out of frustration of repeating development challenges linked with implementation of secure mobile application communication. So let's talk about the most common challenges and how SeaCat address them.

Published on April 16, 2014